
Tool-driven agents retrieve surface-level data, while grounded reasoning agents trace causal lineages—shifting the focus from simple evidence collection to true industrial Root Cause Analysis.

Modern industrial environments generate enormous volumes of telemetry, logs, and domain-specific data. When an anomaly appears (pressure spikes, pump trips, throughput declines), engineers need more than surface-level answers. They need to know why it happened. This is where Root Cause Analysis (RCA) becomes essential, and where most AI agents that rely solely on Model Context Protocol (MCP) “tools” fall short.

In this issue, we explore how deep-rooted investigation differs from simple tool calling, what MCP actually enables beyond the “tool” misconception, and how BKOAI builds grounded agent architectures capable of tracing failures back to their true origin. We close with a real industrial case study demonstrating this workflow in practice.

In energy and industrial systems, failures rarely have a single cause. A pressure alarm may be triggered by a faulty sensor, which itself is responding to a heat exchanger fouling event, which in turn is tied to upstream flow instability caused by compressor recycle. Shallow agents that simply call tools (querying a database, retrieving a log, running a calculation) can collect evidence, but they cannot assemble a causal story.
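The pressure-alarm chain in the paragraph above can be written down as a small causal graph. The sketch below is plain Python with node names invented to mirror that example; it is not BKOAI's data model. It walks upstream from the symptom and returns every causal path that ends at a node with no further known cause. The walk only enumerates candidate lineages; deciding which one the evidence supports is the reasoning step that shallow tool calls skip.

```python
from collections import deque

# Hypothetical graph: each node maps to its known direct causes (effect -> causes).
CAUSES = {
    "pressure_alarm": ["faulty_sensor"],
    "faulty_sensor": ["heat_exchanger_fouling"],
    "heat_exchanger_fouling": ["upstream_flow_instability"],
    "upstream_flow_instability": ["compressor_recycle"],
    "compressor_recycle": [],
}

def trace_root_causes(symptom: str, causes: dict[str, list[str]]) -> list[list[str]]:
    """Breadth-first walk upstream; return each path from the symptom to a node with no known cause."""
    paths = []
    queue = deque([[symptom]])
    while queue:
        path = queue.popleft()
        # Skip causes already on this path so cyclic graphs still terminate.
        parents = [c for c in causes.get(path[-1], []) if c not in path]
        if not parents:
            paths.append(path)  # nothing further upstream: candidate root cause
        for parent in parents:
            queue.append(path + [parent])
    return paths

for path in trace_root_causes("pressure_alarm", CAUSES):
    print(" <- ".join(path))
```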

Traditional RCA requires navigating multiple layers of interconnected information: equipment hierarchies, historical work orders, cross-asset dependencies, operating envelopes, time-aligned logs, and even tribal engineering knowledge. Without grounding agent reasoning in these structures, AI tends to produce fragmented summaries rather than genuine explanations.

This is the gap between a tool-driven agent and a grounded reasoning agent.

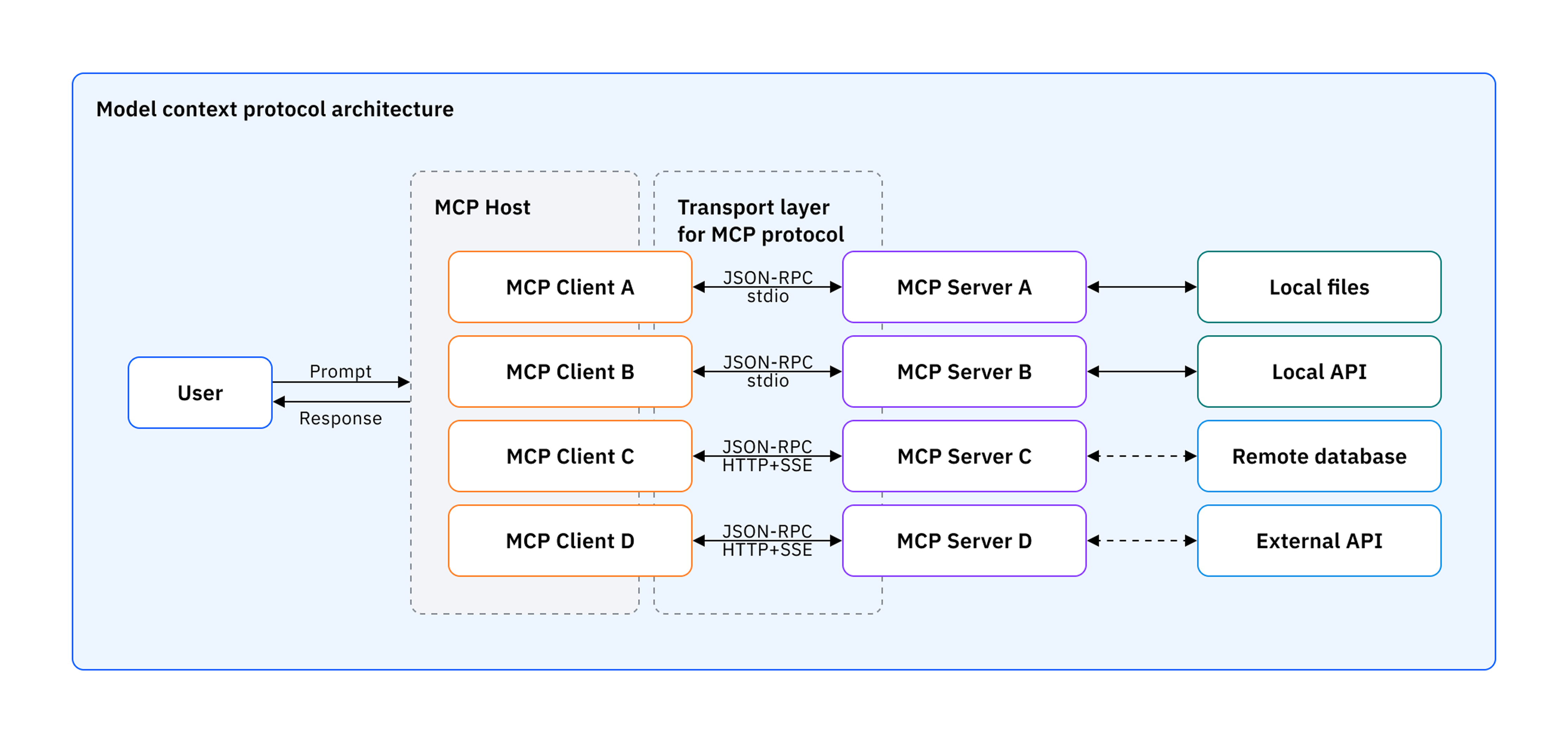

A common misconception is that MCP is simply a unified interface for “tools.” In reality, MCP introduces a deeper architectural shift. It standardizes not only the execution of functions, but also access to resources—the core data systems that encode enterprise knowledge—and prompts that formalize reasoning pathways.

When implemented fully, MCP allows an agent to retrieve structured information from graph databases, historical logs, sensor systems, and documentation repositories, and then apply domain-specific reasoning templates that reflect how engineers approach analysis.

This distinction is crucial. Tools alone fetch data. MCP resources contextualize data. MCP prompts instruct the model how to reason over it.

Grounded decision-making emerges only when an agent can navigate all three dimensions: access → interpretation → synthesis.
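To make the three roles concrete, here is a toy, in-process model of them in plain Python. It deliberately does not use the official MCP SDK, and the `ContextServer` class, the `asset://P-210/metadata` URI, the pump metadata, and the temperature readings are all hypothetical. A tool fetches raw data (access), a resource supplies the context that says what the data means (interpretation), and a prompt template structures what the model is asked to do with both (synthesis).

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class ContextServer:
    """Toy stand-in for the three MCP server primitives (not the real SDK)."""
    tools: dict[str, Callable[..., object]] = field(default_factory=dict)
    resources: dict[str, str] = field(default_factory=dict)  # URI -> read-only context
    prompts: dict[str, str] = field(default_factory=dict)    # name -> reasoning template

server = ContextServer()

# Tool: executes an action and returns raw data (hypothetical historian query, fixed values).
server.tools["query_temperature"] = lambda asset: [71.2, 74.8, 79.5]

# Resource: addressable context describing the asset (hypothetical metadata).
server.resources["asset://P-210/metadata"] = (
    "Centrifugal pump; upstream: heat exchanger E-105; bearing temperature alarm at 85 C"
)

# Prompt: a template that structures how the model should reason over the data.
server.prompts["rca_why_chain"] = (
    "Observation: {observation}\nContext: {context}\n"
    "List candidate causes, the evidence for and against each, "
    "and discard any that the evidence contradicts."
)

def investigate(asset: str) -> str:
    readings = server.tools["query_temperature"](asset)        # access
    context = server.resources[f"asset://{asset}/metadata"]     # interpretation
    observation = f"{asset} temperature trend {readings}"
    # Synthesis: render the prompt that would be sent to the model.
    return server.prompts["rca_why_chain"].format(observation=observation, context=context)

print(investigate("P-210"))
```

Keeping the three registries separate mirrors the distinction drawn above: swapping the data source touches only the tool, while changing how engineers want the analysis framed touches only the prompt.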

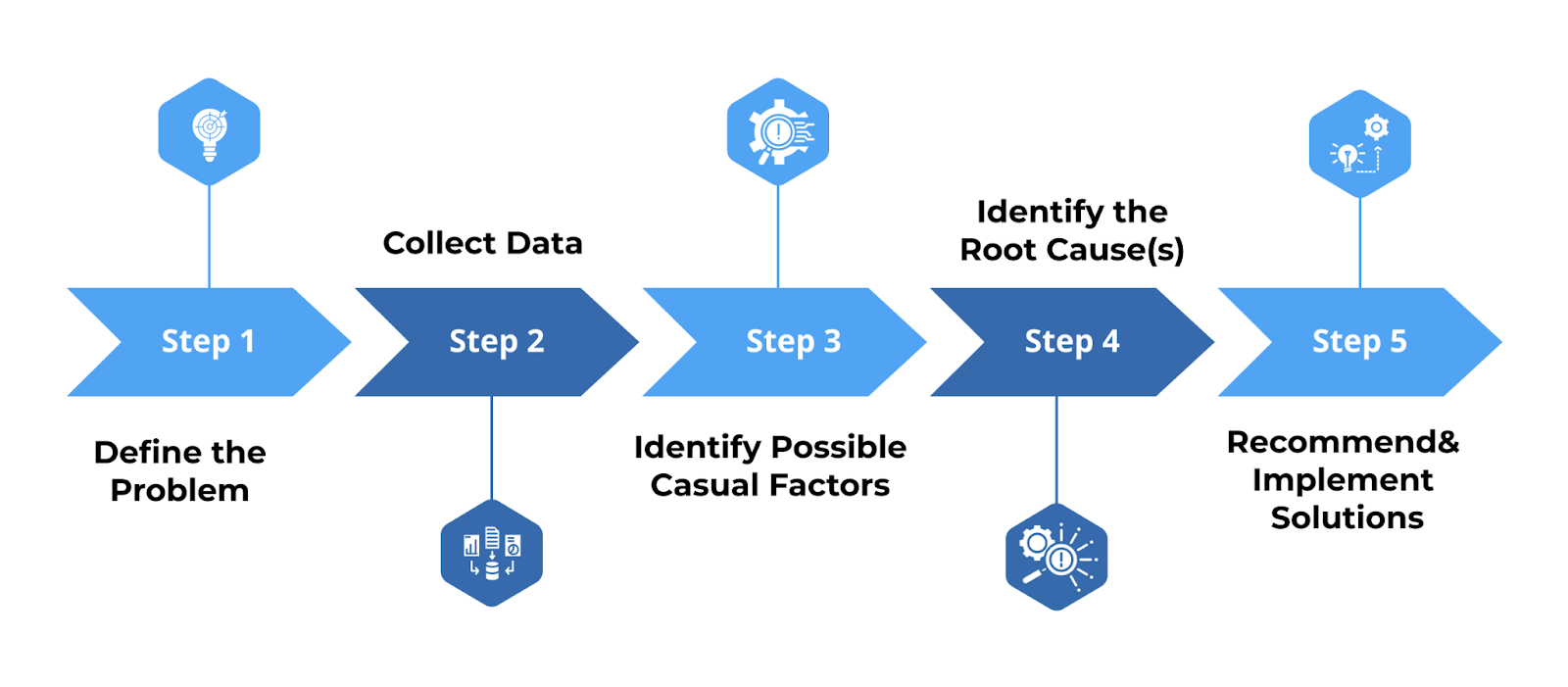

BKOAI’s grounded agent architecture is built on MCP resources and structured reasoning prompts. Rather than guessing, the agent begins by forming a hypothesis space, pulls targeted data from multiple sources, and refines its understanding as new evidence arrives.
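This issue does not describe how BKOAI scores competing hypotheses. As one possible approach, refining a hypothesis space as evidence arrives can be sketched with Bayes' rule: each evidence item multiplies every hypothesis's weight by how probable that evidence would be if the hypothesis were true, and the weights are then renormalized. The hypotheses and likelihood values below are made up for illustration.

```python
def update(priors: dict[str, float], likelihoods: dict[str, float]) -> dict[str, float]:
    """One Bayes-rule step: posterior is proportional to prior * P(evidence | hypothesis)."""
    unnormalized = {h: priors[h] * likelihoods.get(h, 0.0) for h in priors}
    total = sum(unnormalized.values())
    if total == 0:
        raise ValueError("evidence is impossible under every hypothesis")
    return {h: p / total for h, p in unnormalized.items()}

# Hypothetical hypothesis space for a rising pump temperature, starting with equal priors.
beliefs = {"bearing_wear": 1 / 3, "cooling_fouling": 1 / 3, "sensor_drift": 1 / 3}

# Each evidence item supplies P(evidence | hypothesis); the numbers are illustrative only.
evidence_stream = [
    ("vibration also rising", {"bearing_wear": 0.8, "cooling_fouling": 0.3, "sensor_drift": 0.1}),
    ("redundant sensor agrees", {"bearing_wear": 0.9, "cooling_fouling": 0.9, "sensor_drift": 0.05}),
]

for description, likelihoods in evidence_stream:
    beliefs = update(beliefs, likelihoods)
    print(description, {h: round(p, 3) for h, p in beliefs.items()})
```

After both evidence items, bearing wear carries roughly 72% of the weight and sensor drift is close to zero, which is the kind of narrowing a targeted second query is meant to produce.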

For example, imagine an alert such as: “Temperature rising in Pump P-210.” A shallow agent would query a table, return a list of historical events, and stop there.

A grounded agent behaves differently. It retrieves failure modes from a graph database, correlates maintenance logs for recurring patterns, interprets equipment metadata to identify upstream and downstream dependencies, and reads simulation data to understand whether current operating conditions match historical failure scenarios.
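One way to check whether current operating conditions match historical failure scenarios is a nearest-neighbor comparison on scaled signals. In the minimal sketch below, the scenario values, signal names, per-signal scales, and the 2.0 match threshold are all hypothetical; in a real deployment they could come from simulation runs and incident records.

```python
import math

# Hypothetical failure scenarios for P-210 (e.g. from simulation runs or past incidents).
SCENARIOS = {
    "dry_running": {"flow_m3h": 5.0, "discharge_bar": 2.0, "bearing_temp_c": 92.0},
    "cooling_loss": {"flow_m3h": 48.0, "discharge_bar": 6.1, "bearing_temp_c": 88.0},
    "blocked_discharge": {"flow_m3h": 12.0, "discharge_bar": 9.5, "bearing_temp_c": 84.0},
}
# Assumed typical spread of each signal, so differences in different units are comparable.
SCALE = {"flow_m3h": 10.0, "discharge_bar": 1.0, "bearing_temp_c": 5.0}

def scaled_distance(a: dict[str, float], b: dict[str, float]) -> float:
    """Euclidean distance after dividing each signal difference by its scale."""
    return math.sqrt(sum(((a[k] - b[k]) / SCALE[k]) ** 2 for k in SCALE))

def closest_scenarios(current: dict[str, float], max_distance: float = 2.0) -> list[tuple[str, float]]:
    """Rank scenarios by scaled distance, keeping only those close enough to count as a match."""
    ranked = sorted((scaled_distance(current, s), name) for name, s in SCENARIOS.items())
    return [(name, round(d, 2)) for d, name in ranked if d <= max_distance]

current = {"flow_m3h": 46.0, "discharge_bar": 6.0, "bearing_temp_c": 86.0}
print(closest_scenarios(current))
```

Here only `cooling_loss` falls within the threshold (distance about 0.46), so it is the single historical scenario the current state resembles.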

This is not tool calling—it is iterative investigation.

The agent progresses through a “why-chain,” using MCP prompts designed to evaluate competing hypotheses and discard those that do not fit available evidence. The output is not a summary of data sources; it is a defensible causal explanation.
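The why-chain can be sketched as a depth-first search over candidate causes in which any hypothesis contradicted by the evidence is dropped before the agent asks "why" again. In this sketch each hypothesis carries a simple threshold test in place of an MCP prompt; the hypotheses, signal names, and thresholds are invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable

Evidence = dict[str, float]

@dataclass
class Hypothesis:
    name: str
    fits: Callable[[Evidence], bool]                          # False: evidence contradicts it
    causes: list["Hypothesis"] = field(default_factory=list)  # deeper "why" candidates

def why_chain(candidates: list[Hypothesis], evidence: Evidence,
              chain: tuple[str, ...] = ()) -> list[list[str]]:
    """Depth-first 'why' questioning that discards hypotheses the evidence contradicts."""
    explanations = []
    for h in candidates:
        if not h.fits(evidence):
            continue  # discarded: does not fit the available evidence
        path = chain + (h.name,)
        deeper = why_chain(h.causes, evidence, path)
        # If no deeper cause survives, this is the deepest supported explanation.
        explanations.extend(deeper or [list(path)])
    return explanations

# Hypothetical hypotheses and thresholds for "Temperature rising in Pump P-210".
candidates = [
    Hypothesis("sensor_fault", lambda e: e["redundant_sensor_delta_c"] > 3.0),
    Hypothesis("cooler_fouling", lambda e: e["cooler_delta_t_c"] < 5.0),
    Hypothesis("bearing_degradation", lambda e: e["vibration_mm_s"] > 4.5, causes=[
        Hypothesis("lubrication_loss", lambda e: e["days_since_lubrication"] > 90),
        Hypothesis("shaft_misalignment", lambda e: e["alignment_offset_mm"] > 0.1),
    ]),
]

evidence = {
    "redundant_sensor_delta_c": 0.4,  # second sensor agrees with the first
    "cooler_delta_t_c": 8.0,          # cooler still removing heat normally
    "vibration_mm_s": 6.2,
    "days_since_lubrication": 140,
    "alignment_offset_mm": 0.05,
}

for explanation in why_chain(candidates, evidence):
    print(" -> caused by -> ".join(["P-210 temperature rising", *explanation]))
```

With this evidence, sensor fault, cooler fouling, and misalignment are all discarded, leaving bearing degradation caused by lubrication loss as the one supported chain.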

One example of grounded reasoning in action is BKOAI’s Simulation-Aware Failure Mode and Effects Analysis (FMEA) workflow. Many industrial FMEA systems remain static: lists of failure modes encoded in spreadsheets or isolated databases. This makes RCA difficult when conditions deviate from nominal or when multiple subsystems interact in ways the original FMEA never anticipated.

BKOAI’s system integrates FMEA data with simulation models, process conditions, logs, and historical records, enabling the AI agent to build a dynamic picture of evolving risk. When an engineer asks a targeted question—such as “The ventilation fan is excessively vibrating. What should I do?”—the agent grounds its reasoning in real evidence rather than generic pattern matching.

It identifies the documented failure modes associated with excessive vibration in the FMEA graph, compares them with semantic insights extracted from manuals, correlates relevant maintenance histories, evaluates current sensor conditions against simulation envelopes, and produces a synthesized explanation capturing not just what is happening, but why, how likely it is, and under what conditions it may worsen.
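The envelope check and FMEA lookup can be sketched under a simple assumed data layout: a reading is abnormal when it leaves a (low, high) band taken from simulation, a failure mode matches when all of its expected symptoms are abnormal, and matches are ranked by the conventional FMEA Risk Priority Number (severity × occurrence × detection). The FMEA rows, envelope bounds, and readings are hypothetical, and RPN ranking is a stand-in chosen here rather than a description of BKOAI's scoring.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class FailureMode:
    name: str
    symptoms: frozenset[str]  # signals expected to leave their envelope
    severity: int             # 1-10 ratings, as on a conventional FMEA worksheet
    occurrence: int
    detection: int

    @property
    def rpn(self) -> int:
        """Risk Priority Number: severity x occurrence x detection."""
        return self.severity * self.occurrence * self.detection

# Hypothetical FMEA entries for a ventilation fan.
FMEA = [
    FailureMode("rotor_imbalance", frozenset({"vibration_mm_s"}), 6, 5, 4),
    FailureMode("bearing_failure", frozenset({"vibration_mm_s", "bearing_temp_c"}), 8, 4, 5),
    FailureMode("belt_slippage", frozenset({"motor_current_a"}), 4, 6, 3),
]

# Hypothetical simulation envelope: (low, high) expected at the current operating point.
ENVELOPE = {"vibration_mm_s": (0.0, 4.5), "bearing_temp_c": (20.0, 70.0), "motor_current_a": (10.0, 14.0)}

def out_of_envelope(readings: dict[str, float]) -> set[str]:
    """Return the signals whose readings fall outside their simulated band."""
    return {k for k, v in readings.items()
            if k in ENVELOPE and not ENVELOPE[k][0] <= v <= ENVELOPE[k][1]}

def rank_failure_modes(readings: dict[str, float]) -> list[tuple[str, int]]:
    """Keep failure modes whose expected symptoms are all abnormal, highest RPN first."""
    abnormal = out_of_envelope(readings)
    matches = [fm for fm in FMEA if fm.symptoms <= abnormal]
    return [(fm.name, fm.rpn) for fm in sorted(matches, key=lambda fm: fm.rpn, reverse=True)]

readings = {"vibration_mm_s": 7.8, "bearing_temp_c": 64.0, "motor_current_a": 12.1}
print(rank_failure_modes(readings))
```

With these readings only vibration is out of band, so rotor imbalance is the single match; if bearing temperature also exceeded its band, bearing failure would match as well and rank first on RPN.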

This demonstrates how grounded agentic reasoning transforms RCA from a static checklist into a living, adaptive decision engine.

As agent architectures continue to evolve, the need for grounded reasoning will only intensify. Tool-driven agents may retrieve information quickly, but only grounded agents—those leveraging MCP resources, structured prompts, and iterative causal reasoning—can deliver explanations that industrial operations can trust.

Our upcoming research will explore how grounding strategies extend into native agentic AI, multi-agent collaboration, and automated hypothesis testing, especially in complex industrial ecosystems.