Workflow agents deliver structured reliability, while native agentic AI provides adaptive reasoning—together defining the core architecture of modern industrial AI systems.

As agent systems mature, one architectural question increasingly determines whether organizations can deploy AI at scale: how should an agent break down and execute a complex task? In earlier issues, we explored the reasoning and coordination layers of agentic systems—how agents plan, use MCP to access enterprise data, and collaborate through A2A.

This edition moves one level deeper into the execution layer and examines the distinction between workflow agents and native agentic AI, a distinction that is shaping system reliability, cost, and adaptability across industrial deployments.

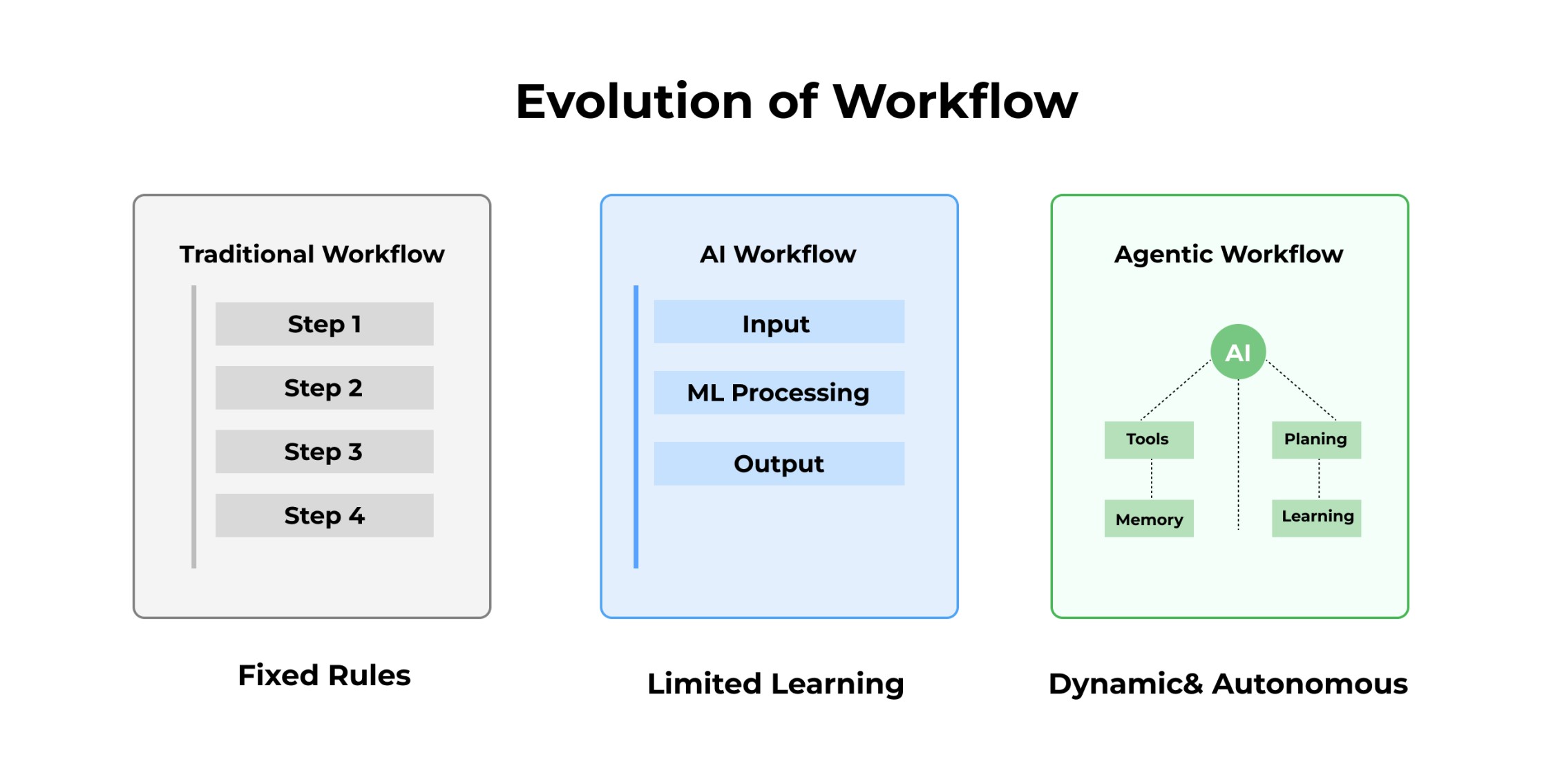

Before diving into native agentic AI, it’s important to understand how workflow-based intelligence has evolved. Many enterprise systems still rely on workflows as their primary execution model, but the nature of those workflows has changed dramatically. We’ve moved from rule-based sequences that execute fixed steps to AI-enhanced flows that incorporate machine learning, and ultimately to agentic workflows that can plan, reason, and coordinate multiple components. Workflow agents sit precisely at this inflection point, bridging structured automation with autonomous decision-making.

Every agent begins with task decomposition: breaking a user's objective into steps that the system executes. The key difference lies in who designs those steps. In a workflow agent, the steps are designed in advance by the system's builders: the agent decomposes a user goal into predefined subtasks and executes them through a coordinated pipeline involving LLM calls, tools, databases, and domain-specific logic. Because the execution path is fixed, workflow agents are highly reliable, auditable, and well-suited for industrial environments where consistency and traceability are essential.
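To make the distinction concrete, here is a minimal Python sketch of a workflow agent as a fixed pipeline. The `WorkflowAgent` class, the step names, the tag `P-202-Discharge-Press`, and the stub functions are all hypothetical; the LLM call is replaced by a string operation so the example runs offline. What it illustrates is the property described above: the order of steps is chosen by the developer rather than at runtime, and every step is logged for auditing.

```python
from dataclasses import dataclass, field
from typing import Any, Callable

# A step receives the shared context and returns updates to merge into it.
Step = Callable[[dict[str, Any]], dict[str, Any]]


@dataclass
class WorkflowAgent:
    """A developer-designed pipeline: the step order never changes at runtime."""
    steps: list[tuple[str, Step]]
    audit_log: list[str] = field(default_factory=list)

    def run(self, goal: str) -> dict[str, Any]:
        context: dict[str, Any] = {"goal": goal}
        for name, step in self.steps:
            updates = step(context)
            context.update(updates)
            # Record which keys each step produced so the run can be audited.
            self.audit_log.append(f"{name}: {sorted(updates)}")
        return context


# Hypothetical steps; a real system would wrap a database query,
# an LLM call, and domain-specific validation logic.
def retrieve_records(ctx):
    return {"records": ["T-101-Feed-Temp", "P-202-Discharge-Press"]}


def classify_with_llm(ctx):
    # Stub standing in for an LLM call on a small, bounded input.
    return {"labels": [r.split("-")[-1] for r in ctx["records"]]}


def validate(ctx):
    return {"valid": all(ctx["labels"])}


agent = WorkflowAgent([
    ("retrieve", retrieve_records),
    ("classify", classify_with_llm),
    ("validate", validate),
])
result = agent.run("Map new tags to equipment")
print(result["labels"])  # ['Temp', 'Press']
print(agent.audit_log)
```

Because the step list is plain data, a reviewer can read the whole execution path before it runs, which is the auditability property attributed to workflow agents here.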

In practice, workflow agents excel when:

Rather than inventing a new plan every time, a workflow agent runs a carefully designed orchestration, combining the strengths of automation and LLM reasoning inside a controlled architecture.

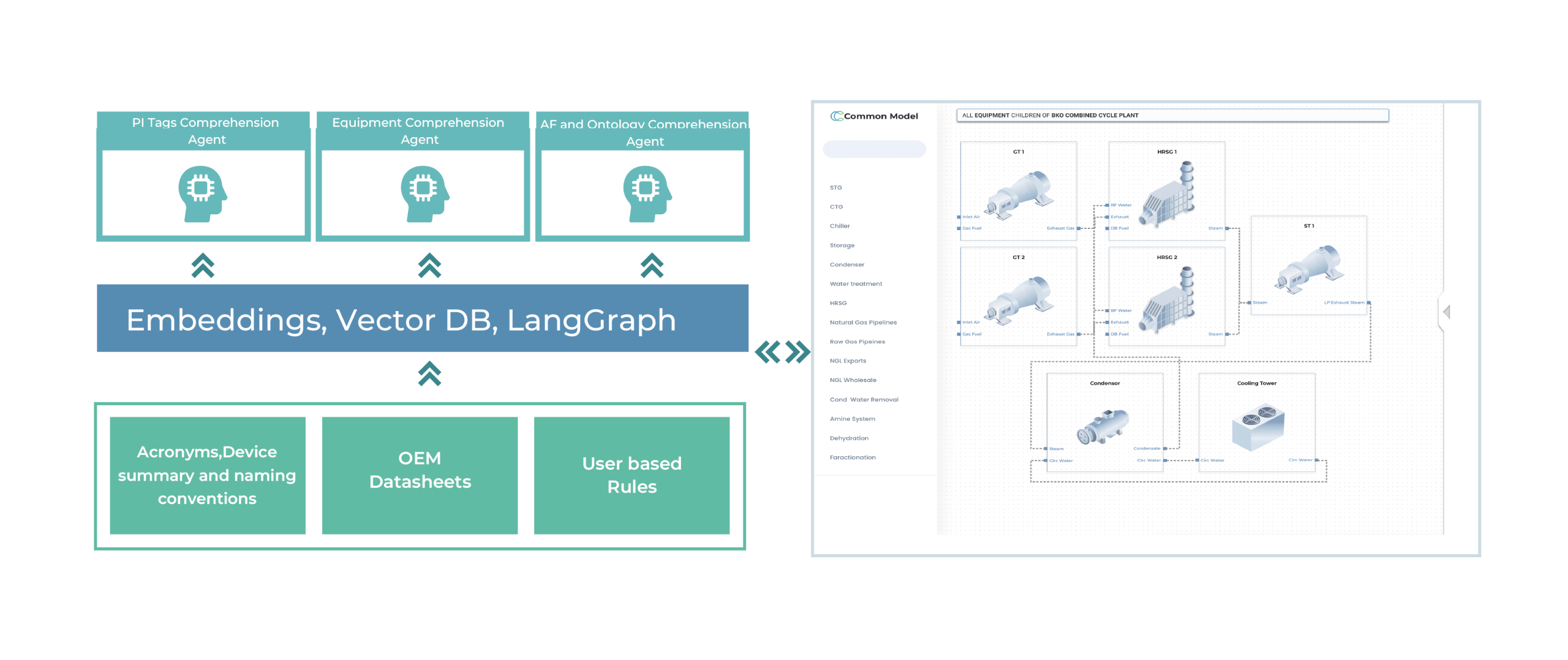

One example of workflow-style agentic AI in production is the BKOAI Contextualization Agent for Equipment Mapping.

Large industrial facilities often contain tens of thousands of raw sensor and engineering “tags”—such as T-101-Feed-Temp—spread across SCADA systems, PI servers, maintenance databases, and engineering documents. Asking an LLM to map all these tags to the correct equipment hierarchy would overwhelm the model and cause context rot, where important information gets lost inside massive input windows.

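Instead of relying on one massive prompt, the Contextualization Agent follows a structured workflow that combines LLM calls with tools, databases, and domain-specific logic. The result is a continuously evolving equipment knowledge graph that unifies PI tags, documentation, and engineering hierarchies, turning previously disconnected industrial data silos into an organized layer of operational intelligence.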

This illustrates how workflow agents excel in large-scale, high-precision industrial tasks: by providing structure, repeatability, and domain-aligned reasoning rather than relying solely on free-form LLM planning.
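Why a fixed pipeline sidesteps context rot can be sketched in a few lines: a deterministic first pass resolves tags that follow a known naming convention, and only the leftovers are split into small batches for an LLM. This is a minimal sketch, not BKOAI's implementation. The naming convention encoded in `TAG_PATTERN`, the `map_tags` and `batched` helpers, and every tag except `T-101-Feed-Temp` are hypothetical, and the LLM call itself is omitted.

```python
import re
from collections import defaultdict

# Hypothetical naming convention: <type letters>-<number>-<signal>, e.g. T-101-Feed-Temp.
TAG_PATTERN = re.compile(r"^(?P<equipment>[A-Z]+-\d+)-(?P<signal>[A-Za-z-]+)$")


def batched(items, size):
    """Yield fixed-size chunks so no single LLM call sees the full tag list."""
    for i in range(0, len(items), size):
        yield items[i:i + size]


def map_tags(tags, batch_size=50):
    """Rule-based first pass; returns the partial hierarchy plus LLM batches."""
    hierarchy = defaultdict(list)  # equipment ID -> signal names
    unresolved = []                # tags the rule-based step could not parse
    for tag in tags:
        match = TAG_PATTERN.match(tag)
        if match:
            hierarchy[match["equipment"]].append(match["signal"])
        else:
            unresolved.append(tag)
    # Only the leftovers would go to an LLM, in small bounded batches (not shown).
    return dict(hierarchy), list(batched(unresolved, batch_size))


tags = ["T-101-Feed-Temp", "T-101-Outlet-Temp", "P-202-Discharge-Press",
        "FIC_330.PV", "misc tag 7"]
hierarchy, llm_batches = map_tags(tags, batch_size=1)
print(hierarchy)    # {'T-101': ['Feed-Temp', 'Outlet-Temp'], 'P-202': ['Discharge-Press']}
print(llm_batches)  # [['FIC_330.PV'], ['misc tag 7']]
```

Real tag conventions vary by site and source system, so in practice a single regex would likely be one of several domain-specific rules rather than the whole first pass.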

Read more: https://www.bkoai.com/use-cases/pi-mapping-intelligence-system

But not all problems can be captured through fixed steps. Many industrial tasks—root-cause investigation, risk assessment, anomaly triage—require open-ended exploration of evidence, integration of live operational data, and reasoning that adapts to new conditions.

This is where native agentic AI becomes essential.

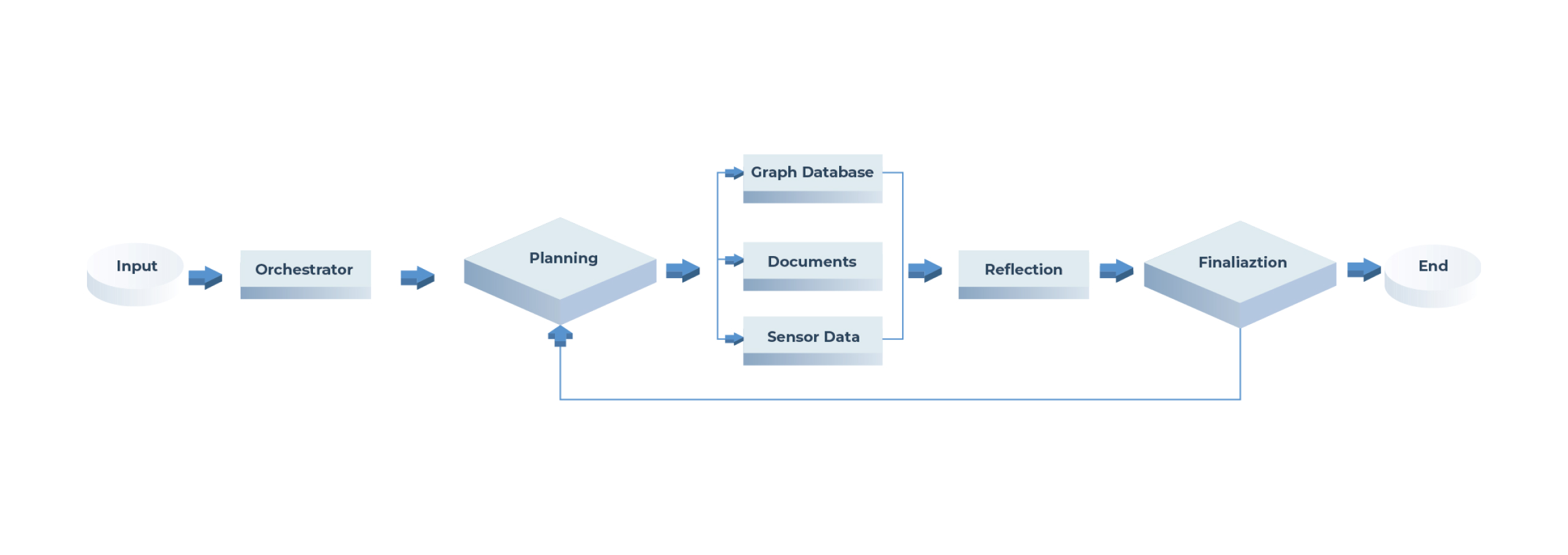

Native agentic systems do not follow a preset workflow. Instead, they autonomously decide how to break down a problem, what tools to call, what information to retrieve, and how to synthesize it. They demonstrate planning, memory, self-correction, iteration, and multi-hop reasoning—capabilities that allow them to operate in dynamic, ambiguous industrial environments.
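By contrast, a native agent's control flow is decided at runtime. The minimal sketch below is an illustration under stated assumptions rather than a production design: a planner (here a deterministic stub standing in for a reasoning model) picks the next tool based on what has been gathered so far, observations accumulate in memory, tool failures are recorded so the planner can re-plan, and a step cap bounds latency and token spend. The tool names, `stub_planner`, `run_native_agent`, and `max_steps` are all hypothetical.

```python
from typing import Callable, Optional

# Hypothetical tools standing in for knowledge-graph, manual, and sensor access.
TOOLS: dict[str, Callable[[str], str]] = {
    "search_manuals": lambda q: f"manual notes on {q}",
    "read_sensors": lambda q: f"live readings for {q}",
}

Memory = list[tuple[str, str]]  # (tool name, observation) pairs


def stub_planner(question: str, memory: Memory) -> Optional[str]:
    """Stand-in for a reasoning model: returns the next tool, or None to stop.

    A real planner would read the question and accumulated memory and could
    change course after each observation; this stub calls each tool once so
    the example is deterministic.
    """
    used = {tool for tool, _ in memory}
    for tool in TOOLS:
        if tool not in used:
            return tool
    return None


def run_native_agent(question: str, planner=stub_planner, max_steps: int = 5) -> Memory:
    memory: Memory = []
    for _ in range(max_steps):  # hard cap bounds latency and token spend
        tool = planner(question, memory)
        if tool is None:        # planner judges the evidence sufficient
            break
        try:
            observation = TOOLS[tool](question)
        except Exception as exc:  # keep failures in memory so the planner can re-plan
            observation = f"error: {exc}"
        memory.append((tool, observation))
    return memory


trace = run_native_agent("hydraulic pump overheating")
for tool, observation in trace:
    print(f"{tool} -> {observation}")
```

The contrast with a workflow agent is that no step order appears anywhere in `run_native_agent`; swapping in a different planner changes the execution path without touching the loop.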

The native agentic approach addresses the limitations of preset workflows in generalization and adaptability, enabling it to handle a wider range of scenarios. However, this comes at a cost: execution is slower, token consumption is higher (since task decomposition typically relies on state-of-the-art reasoning models), and behavior is less stable after deployment. As a result, more failure cases may occur in practice, requiring additional debugging.

Traditional Failure Mode and Effects Analysis (FMEA) processes rely heavily on static spreadsheets and manual lookup. When equipment behaves unexpectedly, engineers must search through manuals, maintenance logs, simulation outputs, and sensor streams, often losing context across systems.

BKOAI’s Simulation-Aware FMEA System introduces a Deep Search Agent that breaks this pattern. When an engineer asks, “What is the overheating risk of the hydraulic pump?”, the agent pulls from the knowledge graph, extracts semantic conditions from manuals, identifies historical patterns from maintenance logs, interprets live operating conditions from sensor and simulation data, and applies predictive models to estimate near-term risk. It continuously integrates and refines evidence, eventually presenting a coherent, explainable conclusion.

This is not a workflow. It is an agent actively reasoning—choosing which tools to use, interpreting ambiguity, reconciling conflicting signals, and producing an analysis that resembles human engineering judgment.
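The last stage, reconciling evidence into an explainable conclusion, can be sketched as follows. This is a deliberately naive weighted vote, not the Deep Search Agent's actual method: the `Evidence` fields, the weights, the threshold, and the example findings are all hypothetical. The point is structural: each conclusion carries the findings that produced it and flags when sources disagree, which is what keeps the output reviewable by an engineer.

```python
from dataclasses import dataclass


@dataclass
class Evidence:
    source: str          # e.g. "maintenance_log", "sensor", "simulation"
    finding: str         # human-readable statement kept for the explanation
    supports_risk: bool  # does this finding point toward elevated risk?
    weight: float        # hypothetical confidence in [0, 1]


def assess(evidence: list[Evidence], threshold: float = 0.5) -> dict:
    """Naive weighted vote over evidence; keeps provenance for explainability."""
    total = sum(e.weight for e in evidence)
    support = sum(e.weight for e in evidence if e.supports_risk)
    score = support / total if total else 0.0
    votes = {e.supports_risk for e in evidence}
    return {
        "elevated_risk": score >= threshold,
        "score": round(score, 2),
        "conflict": len(votes) > 1,  # sources disagree and need reconciling
        "because": [f"[{e.source}] {e.finding}" for e in evidence],
    }


# Hypothetical evidence for the hydraulic-pump question.
evidence = [
    Evidence("maintenance_log", "repeated seal wear after high-load runs", True, 0.5),
    Evidence("sensor", "case temperature trending upward", True, 0.75),
    Evidence("simulation", "thermal margin adequate at current load", False, 0.25),
]
report = assess(evidence)
print(report["elevated_risk"], report["score"], report["conflict"])  # True 0.83 True
for line in report["because"]:
    print(line)
```

A production system would likely replace the fixed weights with calibrated models, but keeping the `because` trail alongside the score is the part that makes the conclusion explainable.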

Read more: https://www.bkoai.com/use-cases/simulation-aware-fmea-analysis

Industrial environments require both patterns of intelligence. Workflow agents bring stability, scalability, and control—critical for routine operations. Native agentic AI brings adaptability, investigation, and depth—critical for solving problems that do not have a predefined path.

Together, they form the architectural foundation of modern agentic systems and are central to how BKOAI designs industrial intelligence for the field.